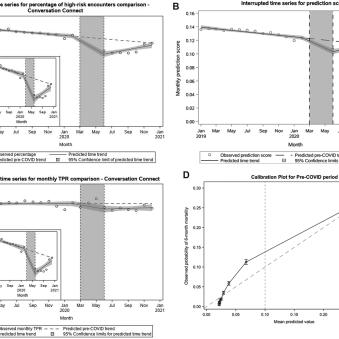

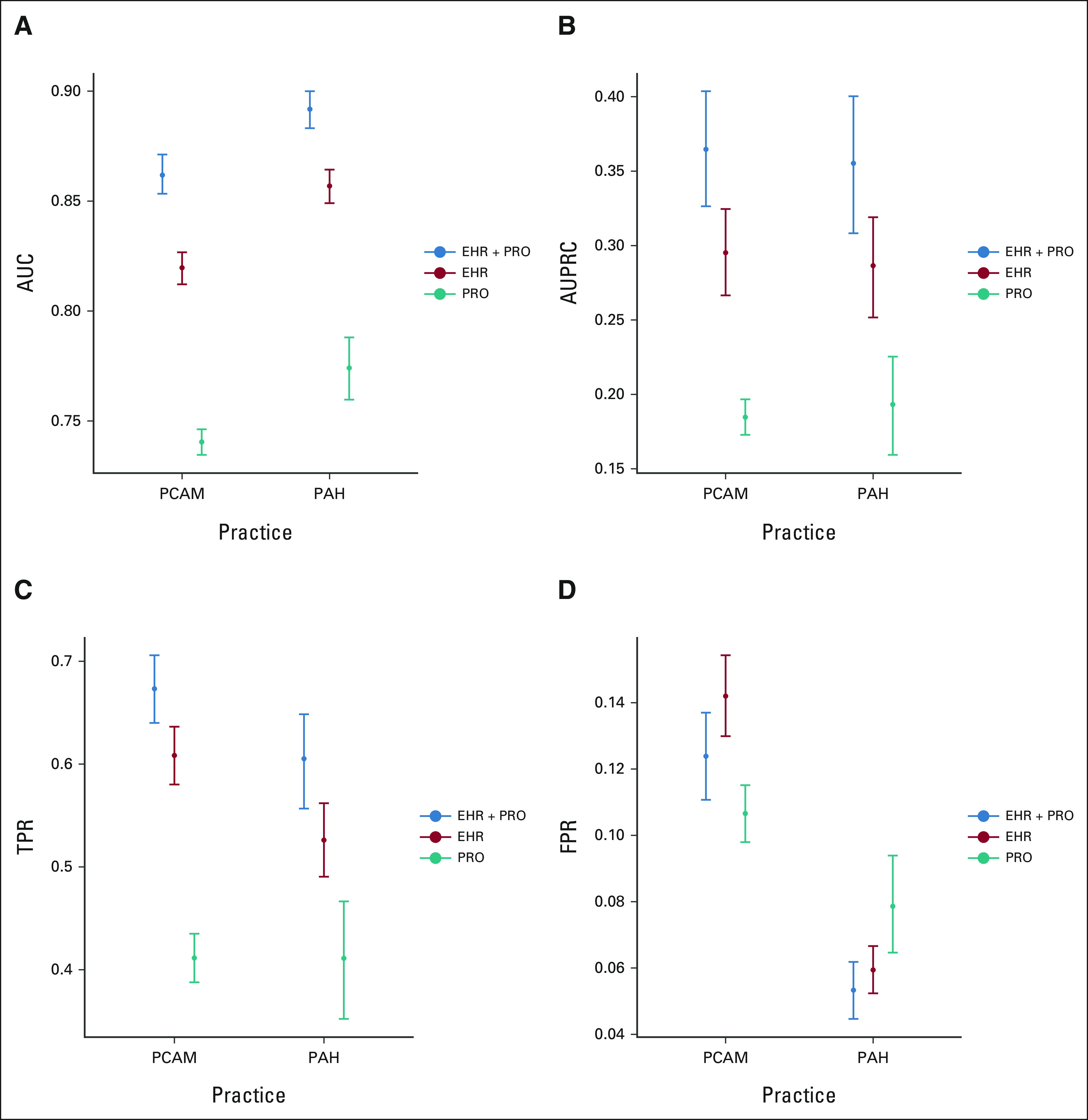

Trustworthiness is critical to the next generation of AI in healthcare. Much of AI's value in healthcare lies in its potential to outperform traditional rule-based algorithms, and possibly even trained physicians and other end-users, on diagnostic and prognostic tasks. As AI becomes more widely available in health operations, a lack of trustworthiness remains a key barrier to its widespread adoption. The National Institute of Standards and Technology (NIST) has defined seven essential building blocks of AI trustworthiness: validity and reliability, safety, security and resiliency, accountability and transparency, explainability, privacy, and fairness. We pursue foundational research on both operational (deployed) and hypothetical predictive algorithms to help ensure that existing algorithms adhere to these standards.